Agile Email Testing

“It depends; you should test that” has to be the most frequently used answer to any email marketing question.

And it’s not entirely unreasonable. What’s right for one brand isn’t necessarily right for another.

However, whilst email testing is very frequently recommended, there is virtually no sound advice on email test methods. Typical advice is: just take 20% of your list, run an A/B split test and send the best to the rest.

I hear from people who tried this process a couple of times, didn’t see a huge benefit, became disillusioned with the outcome and stopped testing. Or from people who ran a test, came to a (wrong) conclusion and dumped the wrong email.

Many of the email solutions with built-in test support don’t help. They let you pick cell sizes that are too small and then declare a winner when there is none.

Take this simple example of how it goes wrong.

An A/B test is run. The A cell gets a click rate of 5% and the B cell gets a 6% click rate.

Sounds great, right? A satisfying 20% uplift with the B cell, declare the winner, tell the boss you’re the hero and roll out the B variant.

How can that be a wrong conclusion? The first issue is the cell size was 100 contacts. That one percentage point difference means one extra person clicked on B vs A.

That one extra click can just as easily be random variation. Ever played a dice game and found that in 10 goes in a row you never got the elusive six you needed? Did that make you conclude the die was loaded? Of course not, everyone gets randomness when tossing coins and throwing dice. Testing is no different.
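To put a number on that randomness, here is a minimal Python sketch. It assumes both cells have exactly the same true click rate of 5% and that every contact clicks independently, then works out how often B would still come out ahead of A by at least one click. The function names are my own, for illustration only.

```python
from math import comb


def binomial_pmf(n: int, rate: float) -> list[float]:
    """Probability of seeing exactly k clicks (k = 0..n) from n contacts."""
    return [comb(n, k) * rate**k * (1 - rate) ** (n - k) for k in range(n + 1)]


def prob_b_beats_a(cell_size: int, click_rate: float, min_extra_clicks: int = 1) -> float:
    """Chance that cell B gets at least `min_extra_clicks` more clicks than
    cell A when both cells share the same true click rate (assumption)."""
    pmf = binomial_pmf(cell_size, click_rate)
    total = 0.0
    for a_clicks, p_a in enumerate(pmf):
        # Add up every B outcome that beats this particular A outcome.
        total += p_a * sum(pmf[a_clicks + min_extra_clicks:])
    return total


# Two identical emails, 100 contacts per cell, 5% true click rate.
print(f"{prob_b_beats_a(100, 0.05):.0%}")
```

Under those assumptions B "wins" a little over 40% of the time even though the two emails are identical, which is why a one-click lead on 100 contacts tells you nothing.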

If you are running simple A/B tests, make sure you use one of the free online statistical significance calculators to check your results.
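If you would rather check the numbers yourself, the sketch below shows one common calculation of this kind, a two-sided two-proportion z-test. I can't say which method any particular online calculator uses, so treat this as an illustration rather than a replica, and note that the normal approximation it relies on is rough when a cell has only a handful of clicks. The function name is hypothetical.

```python
from math import erf, sqrt


def two_proportion_p_value(clicks_a: int, size_a: int, clicks_b: int, size_b: int) -> float:
    """Two-sided p-value for the difference between two click rates,
    using a pooled two-proportion z-test (normal approximation)."""
    rate_a = clicks_a / size_a
    rate_b = clicks_b / size_b
    # Pooled click rate under the assumption that A and B perform the same.
    pooled = (clicks_a + clicks_b) / (size_a + size_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / size_a + 1 / size_b))
    if std_err == 0:
        return 1.0  # no clicks (or all clicks) in both cells: nothing to compare
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)


# The example above: 5 clicks vs 6 clicks from 100 contacts each.
print(round(two_proportion_p_value(5, 100, 6, 100), 2))
```

For the 5% vs 6% example with 100 contacts per cell the p-value comes out at around 0.76, nowhere near the conventional 0.05 threshold. The same click rates on 50,000 contacts per cell give a p-value far below 0.05.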

All tests should start with consideration of the cell size. There is no single answer to the correct size for a test cell. I rarely run tests with fewer than 5,000 contacts per cell and sometimes use 50,000 to 100,000 contacts per cell.

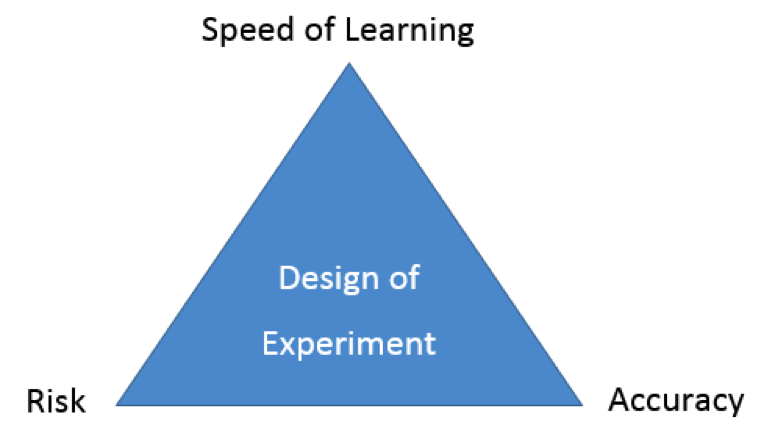

The right size for your test depends on the level of test risk, the desired speed of learning and the accuracy of results required. Do you want to minimize the impact of a poorly performing cell? Do you want to iterate and learn quickly from testing? Do you want to be able to declare a 5% uplift as statistically valid? Sorry to say you can’t have all of these.

If you want to learn quickly then use small cell sizes and run lots of tests. If you want to get statistically valid results for small uplifts then large cell sizes are needed. Trade-offs have to be made when designing tests. One approach is to use a two-pass method: a first pass that aims for maximum speed and a second pass to gain accuracy.
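To get a feel for how steep that trade-off is, here is a rough sketch using the standard textbook sample-size formula for comparing two proportions. The 5% significance level and 80% power are conventional defaults I have assumed, not recommendations for your programme, and the function name is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def contacts_per_cell(base_rate: float, relative_uplift: float,
                      alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate contacts needed in EACH cell to detect a relative uplift
    over `base_rate` with a two-sided test (alpha and power are assumptions)."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_uplift)
    p_bar = (p1 + p2) / 2
    # Normal quantiles for the chosen significance level and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# 5% baseline click rate: a 20% uplift vs a 5% uplift.
print(contacts_per_cell(0.05, 0.20))
print(contacts_per_cell(0.05, 0.05))
```

With a 5% baseline click rate, detecting a 20% uplift needs roughly 8,000 contacts per cell under these assumptions, while detecting a 5% uplift needs well over 100,000 per cell.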

I will be speaking at the Email Innovations Summit in May on how to make these choices, as well as how to manage a few more threats to testing.

That includes another piece of often-cited classic advice: ‘test one thing at a time’. I’ll be covering why this can be exactly the wrong thing to do and how to take an agile approach to email testing and optimization.

I hope you can join me at the Email Innovations Summit in Las Vegas on May 18th.
