Email Insights: The Blueprint for Better Performance Testing for New Products and Services

In “The Blueprint for Better Performance Testing” I walked you through how I look at an existing email campaign to come up with hypotheses for testing.

But what do you do when you don’t have an existing campaign to look at – what do you do when you’re developing a performance testing plan for a brand-new product or service?

Here it’s even more important to do performance testing – but the process of determining what to test is different. Very different.

In addition to not having an existing campaign to ‘riff’ off of, you need to be thinking about the bigger picture with your testing. You’ve got to be looking for learnings that will build the foundation for all your future marketing efforts.

There’s also a lot more at stake. If you fail to boost performance on an existing campaign, it’s a bummer. But if you’re starting from nothing and you fail to build an email marketing campaign that meets the business goals, your new product or service could be ‘retired’ before it reaches its first birthday.

When you’re in launch mode, you are trying to figure out the best way to market this new product or service to your audience, not just today but for the long term. The qualitative learnings you get along the way are just as important as, perhaps more important than, how quickly you meet your quantitative business goals.

To get these long-term learnings, you need to think in terms of formulas and directions, as opposed to specific language and details.

Where to start? I like to make a list of the learnings we’d like to get – basically all the questions we want to answer during the test period. They need to have some grounding in the formulaic and directional approach we just discussed; if they are completely open-ended they won’t be useful. For instance:

“We want to learn how to best describe this new service to potential customers” is a start, but we need to add some direction to make it useful.

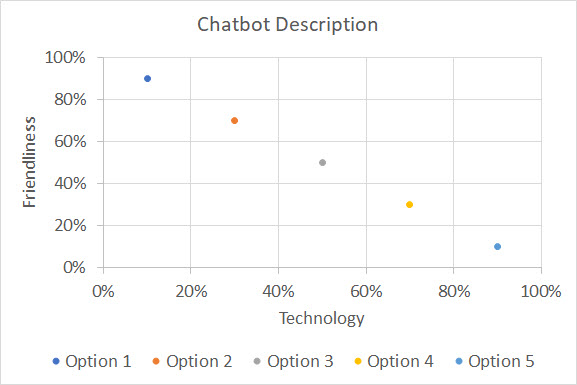

Let’s say the new service is a chatbot which provides information in response to natural language text messages you send to it; it is conversational and learns about you and your needs the more you communicate with it. Two key elements (there may be more) are the technology of it (artificial intelligence) but also the friendliness of it (conversational tone). So, you might come up with a positioning chart for the description that looks like this:

The idea here is to test different description options and see which ‘mix formula’ is most appealing to the target audience. Option 1 devotes about 90% of the description to friendliness and only about 10% to the technology of it. Option 3 is evenly split between the two, while Option 5 focuses heavily on technology, only mentioning the friendliness in passing. We might think of Option 1 as the “Friendly Assistant” angle while Option 5 is an “Artificial Intelligence” play.

The goal is to begin to get a feel – a formula – for how much to stress each of the key elements of the offering. I would begin by writing copy for Option 3, where it’s evenly split, and then adapt that copy for the other four options, keeping the specific language as similar as possible across options but shifting the amount of copy devoted to each element (and perhaps its placement).
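If it helps to make the formula concrete, here is a small Python sketch that turns a target description length into a word budget for each element across the five options. It assumes the options step evenly from 90/10 to 10/90, so Options 2 and 4 land at 70/30 and 30/70; the article only pins down Options 1, 3 and 5, so treat those middle splits (and the hypothetical function name `mix_formula`) as illustrative.

```python
def mix_formula(total_words: int, num_options: int = 5,
                max_pct: float = 90.0) -> list[dict]:
    """Split a description's word count between friendliness and technology.

    Option 1 gives `max_pct` percent to friendliness, the last option gives
    it (100 - max_pct) percent, and the options in between step evenly.
    The even steps are an assumption for illustration.
    """
    min_pct = 100.0 - max_pct
    step = (max_pct - min_pct) / (num_options - 1)
    plan = []
    for i in range(num_options):
        friendly_pct = max_pct - i * step
        friendly_words = round(total_words * friendly_pct / 100)
        plan.append({
            "option": i + 1,
            "friendliness_words": friendly_words,
            "technology_words": total_words - friendly_words,
        })
    return plan


# Usage: a hypothetical 100-word description
for row in mix_formula(100):
    print(row)
```

Writing Option 3 first and then shifting the word budget, rather than rewriting each option from scratch, is what keeps the specific language comparable between cells.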

At the end of the test, you will have the specific language that won, but you will also have a feel for the best ‘mix’ of elements, which provides a blueprint for future copywriting and gives you a way to keep testing the description to optimize it.

You could also do focus group testing to try to get to these kinds of learnings, but be cautious. Focus group testing can be expensive and sometimes there’s a disconnect between what people say and how they behave. If you test this way and use actual conversions (in this case, sign-ups to use the chatbot) as your key performance indicator, you’re basing your decision on how your audience actually behaves, not on how they tell you they will behave.

Side Note: be sure to use a business metric, such as revenue generated, sign-ups or active users, as the key performance indicator (KPI) for your tests. Divide that figure by the number of emails sent, not by the number of opens or clicks, to get a true read on how each test cell performs. You can also use Return on Investment (ROI) or Return on Ad Spend (ROAS) as your KPI, if you are able to calculate it.
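As a rough illustration of the arithmetic, the Python sketch below computes per-send KPIs for a single test cell. The `TestCell` fields and the figures in the usage line are hypothetical, and it assumes you can attribute revenue and a send cost to each cell; ROI is taken as (revenue - cost) / cost and ROAS as revenue / spend.

```python
from dataclasses import dataclass


@dataclass
class TestCell:
    """One test cell; all field names are illustrative assumptions."""
    name: str
    emails_sent: int
    sign_ups: int
    revenue: float
    cost: float  # what it cost to send this cell (assumed to be known)


def sign_ups_per_email(cell: TestCell) -> float:
    # Denominator is emails sent, not opens or clicks.
    return cell.sign_ups / cell.emails_sent


def revenue_per_email(cell: TestCell) -> float:
    return cell.revenue / cell.emails_sent


def roi(cell: TestCell) -> float:
    # Return on investment: net gain relative to cost.
    return (cell.revenue - cell.cost) / cell.cost


def roas(cell: TestCell) -> float:
    # Return on ad spend: revenue per unit of spend.
    return cell.revenue / cell.cost


# Usage with made-up numbers
cell = TestCell("Option 3", emails_sent=10_000, sign_ups=120,
                revenue=2_400.0, cost=200.0)
print(sign_ups_per_email(cell), revenue_per_email(cell), roi(cell), roas(cell))
```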

It’s rare that an open or a click is your end game; you want to measure success by the action you need the customer to take to meet your business goals, not by a step along the way. Sometimes a subject line is great at getting people to open the email but doesn’t do a good job of teeing them up for the ultimate action you need them to take. Ditto with clicks: a higher click-through rate doesn’t guarantee a higher conversion rate or higher revenue.

Opens and clicks are diagnostic metrics – they are great at helping you determine what you need to do to improve your performance. But they themselves are rarely KPIs. This is true whether you’re looking to optimize performance on a new or an existing product/service. If you declare a winner based on opens or clicks you could be taking your program in the wrong direction rather than optimizing it.
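To show how a diagnostic metric can crown the wrong winner, here is a short Python sketch with two hypothetical cells of equal size. The numbers are invented for illustration: Cell B gets more clicks, but Cell A produces more sign-ups per email sent, so the two metrics pick different winners.

```python
# Hypothetical results for two test cells (invented for illustration).
cells = {
    "A": {"sent": 10_000, "clicks": 400, "sign_ups": 80},
    "B": {"sent": 10_000, "clicks": 600, "sign_ups": 60},
}


def winner(results: dict, metric: str) -> str:
    """Return the cell with the highest `metric` per email sent."""
    return max(results, key=lambda name: results[name][metric] / results[name]["sent"])


print("Winner on clicks per send:  ", winner(cells, "clicks"))    # B
print("Winner on sign-ups per send:", winner(cells, "sign_ups"))  # A
```

In this made-up case, declaring B the winner on click-through would move the program toward fewer sign-ups.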

Sometimes different description options appeal to different target audiences; if you can segment your larger list this way, that’s fine, and it’s a useful learning in itself. Always back-test key results with different specific copy to confirm your conclusions.

You’ll want to use a similar approach to get the same type of broad learnings about all the elements of your marketing. For instance,

“Is it enough to include a bullet-point list of the features (in this case, the information the chatbot can provide), or do we need to include copy about benefits and/or advantages along with each feature?”

Here your testing options might be:

- Features only in a bullet-point list

- Features, each with 1 related benefit, in a bullet-point list

- Features, each with 1 related advantage, in a bullet-point list

- Features, each with a related benefit and a related advantage, in a bullet-point list

To get a read on the formula, start by writing the fourth option (each feature with both a benefit and an advantage), then pare it back for the first three.
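One way to keep the four cells consistent is to write the full version once and generate the pared-back versions from it. This Python sketch assumes each feature is stored with one benefit and one advantage; the chatbot features below and the helper name `render_bullets` are hypothetical placeholders, not copy from a real campaign.

```python
# Hypothetical feature copy; each entry holds the full option-4 content.
FEATURES = [
    {"feature": "Answers product questions",
     "benefit": "Get answers without waiting on hold.",
     "advantage": "Available around the clock."},
    {"feature": "Remembers your preferences",
     "benefit": "Skip repeating yourself.",
     "advantage": "Replies get more relevant over time."},
]

# Test options from the list above: (include benefit, include advantage)
VARIANTS = {
    1: (False, False),  # features only
    2: (True, False),   # features + 1 benefit each
    3: (False, True),   # features + 1 advantage each
    4: (True, True),    # features + benefit + advantage
}


def render_bullets(features: list[dict], option: int) -> str:
    """Pare the full copy back to the given test option."""
    include_benefit, include_advantage = VARIANTS[option]
    lines = []
    for item in features:
        extras = []
        if include_benefit:
            extras.append(item["benefit"])
        if include_advantage:
            extras.append(item["advantage"])
        text = item["feature"]
        if extras:
            text += ": " + " ".join(extras)
        lines.append("- " + text)
    return "\n".join(lines)


for option in VARIANTS:
    print(f"Option {option}:\n{render_bullets(FEATURES, option)}\n")
```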

Once you get a read on how much information you need to include about each feature, you can take the next step and test which features and/or mix of features are more effective.
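When you move on to which features to lead with, the number of possible mixes grows quickly. This Python sketch uses `itertools.combinations` to list every mix of a given size; the feature names and the helper `feature_mix_cells` are hypothetical, and in practice your list size will limit how many of these cells you can actually test.

```python
from itertools import combinations
from math import comb

# Hypothetical chatbot features
features = ["weather", "news", "sports scores", "local events"]


def feature_mix_cells(items: list[str], size: int) -> list[tuple[str, ...]]:
    """Every distinct mix of `size` features, in list order."""
    return list(combinations(items, size))


pairs = feature_mix_cells(features, 2)
print(len(pairs), pairs[0])  # 6 ('weather', 'news')

# Total number of non-empty mixes across all sizes: 2**4 - 1 = 15
print(sum(comb(len(features), k) for k in range(1, len(features) + 1)))
```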

Taking a systematic approach like this ensures you cover all the options and sets you up for success both during and after the launch period. Try it with your next new product or service campaign and let me know how it goes!
